“The ChatGPT moment for robotics is here.” That’s not hyperbole from a startup—it’s Jensen Huang, NVIDIA’s CEO, announcing a new era in physical AI. The company has released a suite of open models, frameworks, and infrastructure designed to help robots see, understand, and act in the physical world.

What Is Physical AI?

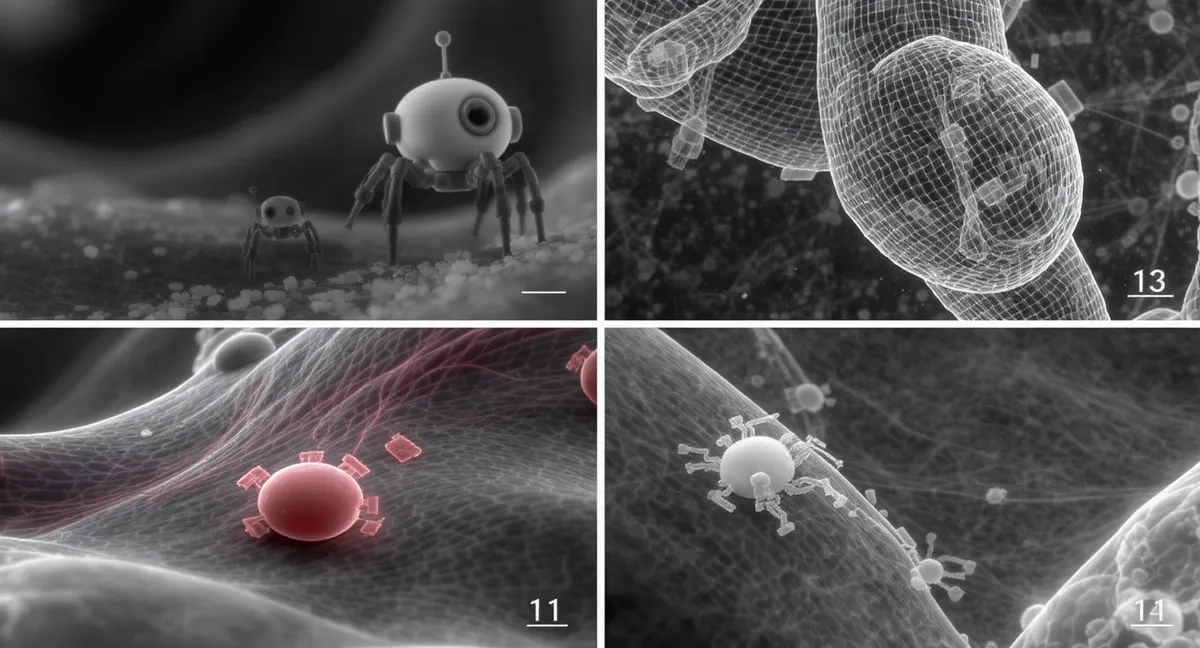

Physical AI refers to artificial intelligence systems designed to interact with the real, three-dimensional world. Unlike language models that process text or image generators that create pictures, physical AI must understand how objects move, how forces work, and how to safely manipulate physical environments.

This is harder than it sounds. A language model can recover from a wrong word, but a robot that misjudges a grasp can break objects or hurt people. Physical AI requires a level of reliability and physical intuition that has historically been difficult to achieve.

The Cosmos World Models

At the heart of NVIDIA’s announcement are the Cosmos world foundation models. These come in two flavors:

Cosmos Predict 2.5

This model generates physically based synthetic data: realistic simulations of how objects and environments behave. Robots can train on millions of scenarios without needing expensive real-world data collection.
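To make the idea of simulation-generated training data concrete, here is a deliberately tiny sketch in plain Python. It has nothing to do with Cosmos itself: the drop simulator, the `simulate_drop` and `make_dataset` names, and the height range are all assumptions chosen for illustration. It only shows the general pattern of randomizing a scenario, running a physics rule, and recording labeled examples without touching real hardware.

```python
import random

G = 9.81  # gravitational acceleration in m/s^2


def simulate_drop(height_m, dt=0.01):
    """Step a falling object forward in time until it reaches the ground.

    Toy physics only: no drag, no bounce. Returns elapsed time in seconds.
    """
    y, v, t = height_m, 0.0, 0.0
    while y > 0.0:
        v += G * dt   # update velocity from gravity
        y -= v * dt   # update height from velocity
        t += dt
    return t


def make_dataset(n, seed=0):
    """Build n labeled examples by randomizing the starting height."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    data = []
    for _ in range(n):
        height = rng.uniform(0.5, 3.0)  # hypothetical range of drop heights
        data.append({"height_m": height, "fall_time_s": simulate_drop(height)})
    return data


dataset = make_dataset(1000)
```

Each entry pairs a randomized input with the outcome the simulator produced, which is the shape of data a learning system would train on. A real world model replaces the one-line physics rule with learned, far richer dynamics.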

Cosmos Reason 2

A reasoning vision-language model that enables machines to see, understand, and plan actions in the physical world more like humans do. It bridges perception (what does the robot see?) with action (what should the robot do?).

Isaac GR00T N1.6

For humanoid robots specifically, NVIDIA released Isaac GR00T N1.6—an open vision-language-action model supporting full-body control. This model integrates visual perception with action planning, helping humanoid robots move and manipulate objects more naturally.

Why This Matters

Democratizing Robotics

By releasing these models openly, NVIDIA is lowering the barrier to robotics development. Startups and researchers can build on proven foundations rather than starting from scratch.

Accelerating Development

The combination of world models (for training) and action models (for deployment) creates a complete pipeline. Developers can simulate, train, and deploy robotic systems faster than ever.

Standardization

Common tools and models help create an ecosystem where components work together. A perception system trained on Cosmos can feed into an action system built on Isaac.
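One way to picture components working together is a shared interface between perception and action. The sketch below is a minimal illustration in plain Python, not NVIDIA's API: `Detection`, `Command`, `Perception`, `Policy`, `FakePerception`, and `NearestObjectPolicy` are all hypothetical names invented here. The point is only that if both sides agree on the data passed between them, either side can be swapped out.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Detection:
    """One object seen by the perception system (hypothetical format)."""
    label: str
    x_m: float
    y_m: float


@dataclass(frozen=True)
class Command:
    """One instruction for the robot (hypothetical format)."""
    action: str
    target_x_m: float
    target_y_m: float


class Perception(Protocol):
    def detect(self, frame: bytes) -> list[Detection]: ...


class Policy(Protocol):
    def act(self, detections: list[Detection]) -> Command: ...


class FakePerception:
    """Stand-in for a trained perception model; returns fixed detections."""
    def detect(self, frame: bytes) -> list[Detection]:
        return [Detection("cup", 0.4, 0.1), Detection("box", 1.2, -0.3)]


class NearestObjectPolicy:
    """Stand-in for an action model; reaches for the closest object."""
    def act(self, detections: list[Detection]) -> Command:
        if not detections:
            return Command("idle", 0.0, 0.0)
        nearest = min(detections, key=lambda d: d.x_m ** 2 + d.y_m ** 2)
        return Command("reach", nearest.x_m, nearest.y_m)


def step(perception: Perception, policy: Policy, frame: bytes) -> Command:
    """Run one perceive-then-act cycle through the shared interface."""
    return policy.act(perception.detect(frame))


command = step(FakePerception(), NearestObjectPolicy(), b"")
```

Because `step` depends only on the two protocols, a different perception model or a different policy can be dropped in without changing the loop, which is the practical payoff of standardization.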

Partner Announcements

NVIDIA’s announcement came alongside news from global partners developing next-generation robots. Major automotive manufacturers, warehouse operators, and manufacturing companies are all building on the NVIDIA physical AI platform.

Mercedes-Benz announced the CLA will be the first production vehicle shipping with NVIDIA’s complete autonomous driving stack, including new reasoning models for safer navigation.

The Bigger Picture

Physical AI represents a fundamental shift in what AI can do. Language models changed how we interact with information. Physical AI will change how AI interacts with the real world.

The implications extend across industries:

- Manufacturing: Robots that can adapt to new tasks without reprogramming
- Logistics: Autonomous systems navigating complex warehouse environments
- Healthcare: Robotic assistants helping with physical care tasks
- Agriculture: Machines that can identify and pick crops autonomously

Challenges Ahead

Despite the optimism, physical AI faces significant challenges:

Safety

Robots operating in human environments must be extraordinarily reliable. Edge cases that are merely embarrassing for chatbots could be dangerous for robots.

Cost

Advanced sensors and computing hardware remain expensive. Widespread deployment requires bringing costs down significantly.

Regulation

As robots become more autonomous, regulatory frameworks will need to evolve to ensure safety while enabling innovation.

The Road Forward

NVIDIA’s announcement signals that physical AI is ready for prime time—not as a distant research goal but as practical technology for near-term deployment. The tools are available. The models are trained. The ecosystem is forming.

The robotics revolution isn’t coming. It’s here.

How do you see physical AI changing your industry? Share your predictions in the comments below.
